(This was originally published as #AirQualityEgg in Chicago… The First Deployment as a guest post on the Cosm.com blog, where it appeared with some additional edits by Ed Borden.)

On July 24 and 25, Air Quality Egg hackers from around the country gathered in Chicago for a quick sprint. Our objectives were to substantively advance the overall Air Quality Egg project while also developing a Chicago Egg community. Ed Borden, evangelist at Cosm.com and AQE project lead, had set an extremely ambitious agenda: solder 15 Nanode boards using the newest sensor configuration; modify, load, and test the on-board software; fabricate Egg enclosures; deploy sensors in the city of Chicago; and collect and attempt to visualize our data. It was a week’s worth of work and we had two days.

Community interest is tremendous and growing

Though we announced the Chicago Hackshop barely three weeks prior to the event date, we had enormous interest with many out-of-town attendees. Joe Saavedra and Ed Borden brought a large contingent from New York. David Holstius came from Berkeley, David Hsu from Penn. Richard Beckwith and Adam Laskowitz traveled from Portland. Matt Waite flew in from Lincoln, Nebraska. I flew in from D.C.

But interest from the City of Big Shoulders was the biggest reason we had such a successful event. The School of the Art Institute of Chicago donated its world-class facilities and equipment, which included a general workspace, surface-mount soldering equipment, and the laser cutters and materials used in the enclosure development. Argonne National Lab helped promote the event within their network and sent several attendees.

The City of Chicago was very excited about the project, and though we’d only given them a few days’ notice, they offered to help us host Eggs on city property and scrambled to find suitable locations (more on that in a second…). Employees of the U.S. EPA’s Chicago office also dropped by, both out of general curiosity and to answer questions about the EPA’s own monitoring sensors and air quality data.

Our group was diverse and cross-disciplinary. Besides attracting folks from Chicago and beyond, we had old and young, experienced Egg hackers and first-timers, and backgrounds that spanned environmental science, architecture, design, journalism, the humanities, engineering, and computer science, to name a few.

Deployment: people first

The physical requirements for the boards we used were wired Ethernet and power. We wanted to deploy near areas that were well-trafficked by people, with sensors placed at the height at which people congregate. Ideally, we wanted to cluster many sensors close to each other and close to a calibrated EPA sensor, so that we could compare Eggs with one another and with a calibrated scientific-grade sensor.

Ed and Joe shared the people-first adoption approach that they’ve arrived at after months of experience. The Egg community is meant to extend far beyond those who attend hackathons. We need people who can adopt an Egg, deploy it at their home or work, maintain it over time, and share and promote the project over the long term.

Though we were very excited by the City’s gracious offer to host Eggs on city property in prime locations, given all of the uncertainty and new updates, what we needed most was committed volunteers to oversee each Egg. We stuck with the “people first, location second” approach. It has already proved to be a wise choice. We’ve had a few technical hiccups since the event, but the Eggs’ dedicated foster parents have made small adjustments and updated firmware.

Enclosure Fabrication

While the machine-fabricated Eggs for the Kickstarter campaign will use an injection-molded case, Ed lamented that this would be the one part of the entire unit that DIY enthusiasts couldn’t build on their own. The team needed a case for the Chicago sensors, and this was the first crack at an open case design that could be downloaded and cut from simple materials or pre-cut and shipped as a kit for self-assembly.

The group wanted something beautiful and functional. The case needed adequate venting for the microcontroller, maximum airflow for the air sensors, and adequate resistance to precipitation. When the team saw the first completed case, our jaws dropped. The enclosure group came up with something that was both functional and gorgeous, right down to the “www.airqualityegg.com” etching. A few tweaks will be needed to increase water resistance and make it less likely to trap heat under direct sunlight, but it was an incredible endeavor for two days and something to build upon in the future. Like all components of the Egg project, the design files are open source.

Hardware

Miraculously, Joe cleared TSA security with a suitcase full of Nanode boards, sensors, and other assorted hardware. The Chicago sensors were built using the latest sensor configuration and contained on-board temperature and humidity sensors, as well as sensors for NO2 and CO. The bulk of the soldering was done by two high school students!

Joe’s team made software updates to adjust the voltage based on temperature and humidity, giving the boards a way to compensate according to climate conditions.

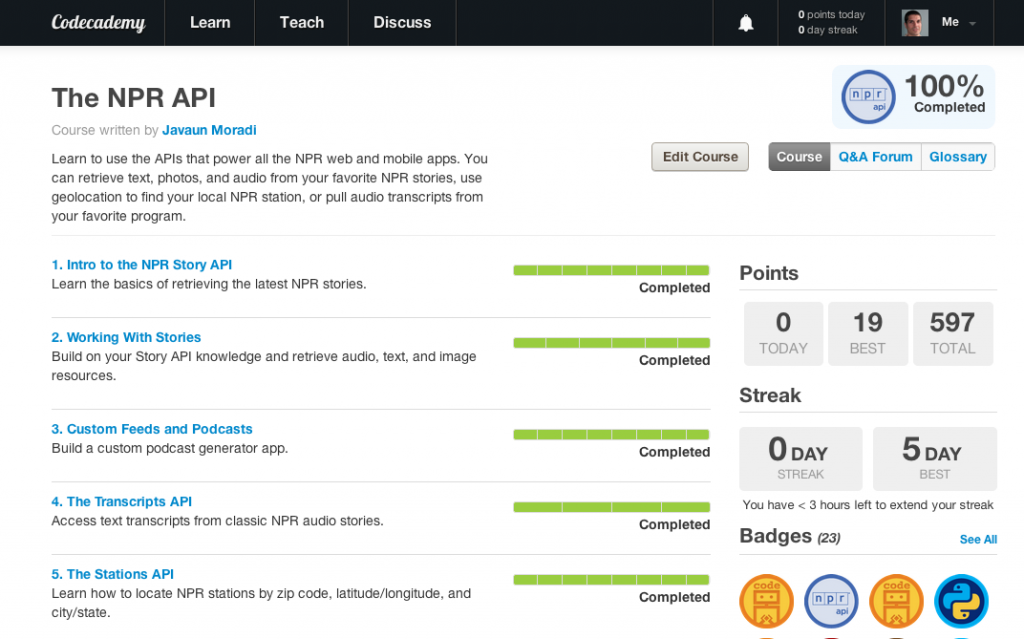

Data

While the rest of the team focused on building and deploying hardware, the data team had two full days to think about their task. First, we needed a yardstick against which to compare our Egg sensors. The closest official sensor was the Illinois EPA air sensor at 327 South Franklin Street in the Chicago Loop. We worked with Tim Dye of SonomaTech, the company that manages air quality feeds for state EPAs and the U.S. EPA. Tim gave us access to the most granular hourly EPA data available, and we ingested it into Cosm.com for ease of use. We started with a few questions we’d like to answer:

- How does Egg data compare to the EPA data?

- How can we compare Eggs for consistency?

- How does my environment compare to that of other Eggs?

- How can I explain what my Egg is doing to other people?

- How can I find other Eggs (or other people) like me?
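As one illustration of the first question, here is a minimal Python sketch of how Egg readings might be lined up against hourly reference data. It assumes the Egg reports timestamped numeric samples and that the reference series is already keyed by clock hour; the function names and data shapes are hypothetical, not the data team's actual code (which used R and Processing). It only measures whether the two series move together, not whether the Egg is accurate in absolute terms.

```python
from collections import defaultdict
from datetime import datetime
from math import sqrt


def hourly_means(samples):
    """Average (timestamp, value) samples into one value per clock hour."""
    buckets = defaultdict(list)
    for ts, value in samples:
        hour = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour].append(value)
    return {hour: sum(vals) / len(vals) for hour, vals in buckets.items()}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (spread_x * spread_y)


def compare_to_reference(egg_samples, reference_hourly):
    """Correlate hourly-averaged Egg samples with an hourly reference series.

    Only hours present in both series are used.
    """
    egg_hourly = hourly_means(egg_samples)
    shared = sorted(set(egg_hourly) & set(reference_hourly))
    return pearson(
        [egg_hourly[h] for h in shared],
        [reference_hourly[h] for h in shared],
    )
```

A value near 1 would suggest the Egg tracks the reference sensor's ups and downs; the same function can compare two Eggs by passing one Egg's hourly means as the reference.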

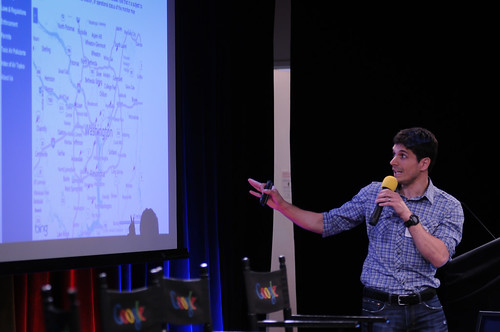

The group produced a few visualizations of Egg data (until the first Chicago Eggs were transmitting, we prototyped using a European Egg data feed) and EPA data using R and Processing, and created a few very basic maps.

Wrap Up

As we left for the airport, the sensors were all out for installation. We’re grateful to our Chicago hosts, especially to Robb Drinkwater of the School of the Art Institute (SAIC), who handled all of the logistics and organization, and to Rajesh Sankaran of Argonne, whose technical expertise was hugely valuable both at the event and in the subsequent care and feeding of the Eggs. Above all, thanks to Charlie Catlett of SAIC and Argonne, who grabbed onto the first mention of a Chicago hackathon and never let it go.

What’s next? The promise and challenge of citizen networks

Whether you’re a citizen enthusiast, a scientist, an open data hacker, a journalist, or a general do-gooder, the idea of being able to look at air quality measurements from your own surroundings, visualize air conditions, contribute data to a larger community, and ultimately have a full picture of your region and others is extremely compelling. Every task and every decision is in pursuit of this vision.

There are some real technical hurdles to achieving data quality, and it’s impossible to talk about the data without talking about the hardware itself. The inexpensive sensors on the Egg don’t actually measure carbon monoxide or nitrogen dioxide or any other air pollutants. They measure electrical resistance. A metal oxide sensor reacts with chemical compounds in the air, and that changes the overall resistance of the sensor. That’s all we can measure, and that’s why the raw readings coming off the sensor look like a generic “1234” and not “4 ppm”.
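To make the resistance point concrete, here is a minimal Python sketch of how a raw count could be turned into a resistance. It assumes the sensor sits on the high side of a simple voltage divider with a fixed load resistor and is read by a 10-bit analog-to-digital converter. The 10 kΩ load, the 10-bit range, and the function name are illustrative assumptions, not details of the Egg's actual circuit or firmware.

```python
def sensor_resistance(adc_count, load_ohms=10_000.0, adc_max=1023):
    """Estimate sensor resistance (ohms) from a raw ADC count.

    Assumed wiring (hypothetical): supply -> sensor -> ADC pin -> load -> ground,
    so adc_count / adc_max = load / (sensor + load).
    """
    if not 0 < adc_count <= adc_max:
        raise ValueError("adc_count must be in the range 1..adc_max")
    return load_ohms * (adc_max / adc_count - 1.0)


# A count one third of full scale means the sensor is twice the load resistance.
print(sensor_resistance(341))  # 20000.0
```

Even this result is only a resistance. Turning it into a concentration such as "4 ppm" would need a calibration curve for the specific sensor, which is exactly the piece the paragraph above says is missing.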

It gets more complicated. Temperature and humidity also affect sensor readings, which is why the Eggs have on-board sensors for both and attempt to compensate for fluctuations. A sensor for one pollutant may also be adversely affected by the presence of another; for example, NO2 and SO2 interfere with one another. EPA and other scientific sensors account for this and are also regularly calibrated against a standard. It’s a precise, costly, time-consuming process, and not one that the Egg community seeks to replicate. Practically, this means that the Egg has no means to “zero” itself the way you might do with a bathroom scale before weighing yourself. Data quality is one of the greatest challenges the Air Quality Egg community faces. Different approaches are hotly debated every week on the Google Group. We won’t solve it in the next two months, let alone the two days we had in Chicago, but it’s a question that is perpetually on our minds.
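Without a way to zero the sensor, one possible workaround is to express each reading relative to that Egg's own typical value, which gives direction and rough magnitude of change rather than an absolute measurement. The Python sketch below is only an illustration of that idea, using the median as an assumed baseline; it is not an approach the community has settled on, and the function name is hypothetical.

```python
from statistics import median


def relative_to_baseline(readings):
    """Return each reading as a fractional change from the series median.

    0.0 means "typical for this Egg"; 0.1 means 10% above its typical value.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    baseline = median(readings)
    if baseline == 0:
        raise ValueError("baseline of zero cannot be used for scaling")
    return [(r - baseline) / baseline for r in readings]
```

Because the baseline comes from the Egg itself, this says nothing about whether the typical value is clean or polluted air; it only shows when conditions depart from that Egg's norm.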

After directional data accuracy, a big open question is how to make Egg data meaningful to people. Calibrated government and scientific sensor readings are verified for data quality and then translated into pollutant measurements, such as 8 ppb (parts per billion). While such numbers are meaningful to scientists, the average citizen couldn’t tell you if such a reading is normal or a cause for concern. The U.S. EPA works with state air quality data to calculate a derived Air Quality Index (AQI) based on several measurements. The AQI translates to a color scale that typically fluctuates from green (healthy) to red (unhealthy) but does go all the way up to maroon (hazardous). We’re just scratching the surface on how to make data more meaningful and understandable to people. Can certain pollutants serve as “markers” of the current state? How do we communicate trend and concern, knowing that we won’t have the accuracy or authority of official measures? These are issues that we’ll continue to debate on the Google Group, as we prepare for the next Egg hackathon in Boston. Put October 11-13, 2012 on your calendar!
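For readers curious about the AQI color scale mentioned above, here is a small Python sketch of the lookup from an AQI value to its category and color, using the U.S. EPA's published breakpoints. It applies to official AQI values only; raw Egg readings cannot be fed into it, and the function name is our own.

```python
# Upper bound of each U.S. EPA AQI category, with its name and color.
AQI_CATEGORIES = [
    (50, "Good", "green"),
    (100, "Moderate", "yellow"),
    (150, "Unhealthy for Sensitive Groups", "orange"),
    (200, "Unhealthy", "red"),
    (300, "Very Unhealthy", "purple"),
    (500, "Hazardous", "maroon"),
]


def aqi_category(aqi):
    """Return (category name, color) for an AQI value."""
    if aqi < 0:
        raise ValueError("AQI cannot be negative")
    for upper, name, color in AQI_CATEGORIES:
        if aqi <= upper:
            return name, color
    # Values above the top of the scale are still treated as hazardous.
    return "Hazardous", "maroon"


print(aqi_category(42))   # ('Good', 'green')
print(aqi_category(151))  # ('Unhealthy', 'red')
```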